As something of a novice digital marketer, I can't tell you how excited I am by the idea of programmatic SEO.

For the uninitiated, programmatic SEO is the process of generating content from a set of data, usually as part of an automated process. Information is stitched together in a human-readable format that targets long-tail, low-volume keywords. If you have enough data, you can generate hundreds or even thousands of landing pages in seconds. And since you're aiming for keywords with less than stellar search volume, there's probably little to no competition.

Imagine having 100 pages that each rank #1 for a different query, with each query getting roughly 50 to 100 searches a month. A good portion of those searches should result in a click through to the website, right? Even with low search volume per page, enough of these pages could easily generate several thousand new visitors to your website.
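For a rough sense of the math, here's a back-of-the-envelope sketch in Python. The page count and search range come from the scenario above; the 30% click-through rate is purely an assumption for illustration, not a measured figure, and the function name is made up.

```python
def estimate_monthly_visitors(pages, searches_low, searches_high, click_through_rate):
    """Return a (low, high) estimate of monthly visitors across all pages."""
    low = round(pages * searches_low * click_through_rate)
    high = round(pages * searches_high * click_through_rate)
    return low, high


# 100 pages, each ranking for a query with 50 to 100 searches a month.
# ASSUMPTION: 30% of searchers click the top result (illustrative only).
low, high = estimate_monthly_visitors(
    pages=100, searches_low=50, searches_high=100, click_through_rate=0.30
)
print(f"Estimated visitors per month: {low} to {high}")
```

Swap in your own click-through guess to see how sensitive the estimate is to that one number.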

I think most marketers would agree that getting traffic through search is a gold mine, but creating content is a huge pain. Automating the most painful part of content creation, i.e. writing the content, would make SEO marketing 10x easier to scale than it is currently.

Imagine an audience for your product that's easy to build and grow. Cha-ching.

I've spent some time toying with programmatic SEO, and the more I learn about it the more I see untapped potential.

So, I decided to take a deep dive into the concept with a little experiment. One purely designed to test the limits and potential of automated SEO.

Setting Up a Programmatic SEO Website

Initially I was drawn to programmatic SEO because my project, Fantasy Congress, collects tons of data, and I wanted to find a way to re-use that data for marketing purposes. But, as I eventually learned, the subject matter around this project just isn't conducive to SEO.

If I wanted to do more with programmatic SEO, I'd have to start fresh with entirely different content.

So I began to wonder: Could I create a new website that gets traffic purely from programmatic SEO? Could it get enough traffic to generate revenue? And if so, what's stopping me from making a small army of these little passive income generators?

With those questions in mind, the experiment was born.

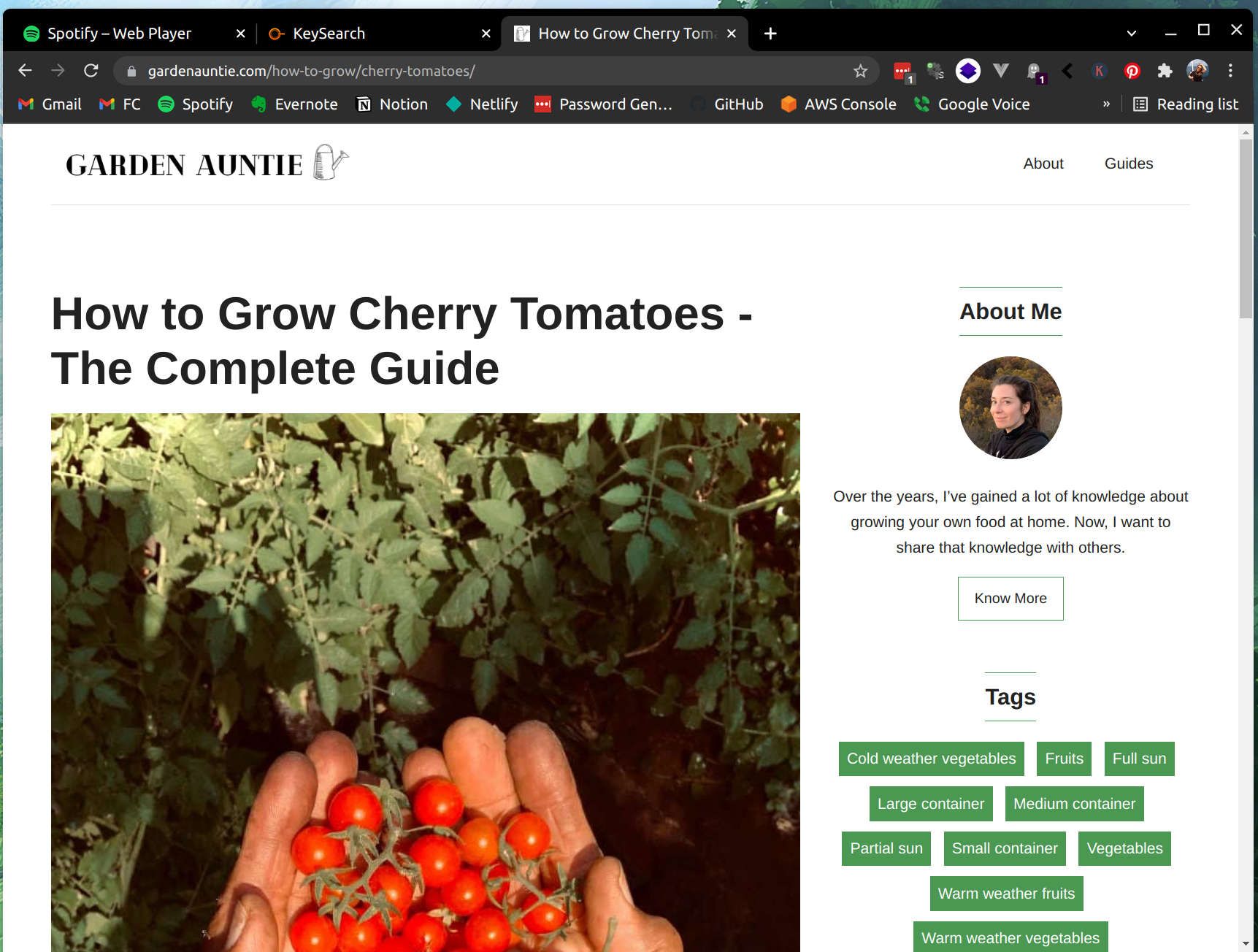

Introducing Garden Auntie

I bought gardenauntie.com from Namecheap for $10/yr and set up a basic website with my favorite static site generator, Hugo. The website is currently hosted for free on GitHub Pages.

I chose gardening because it interests me, and there is lots of data that can be collected about plants. Most of all, people are searching for this information quite a bit.

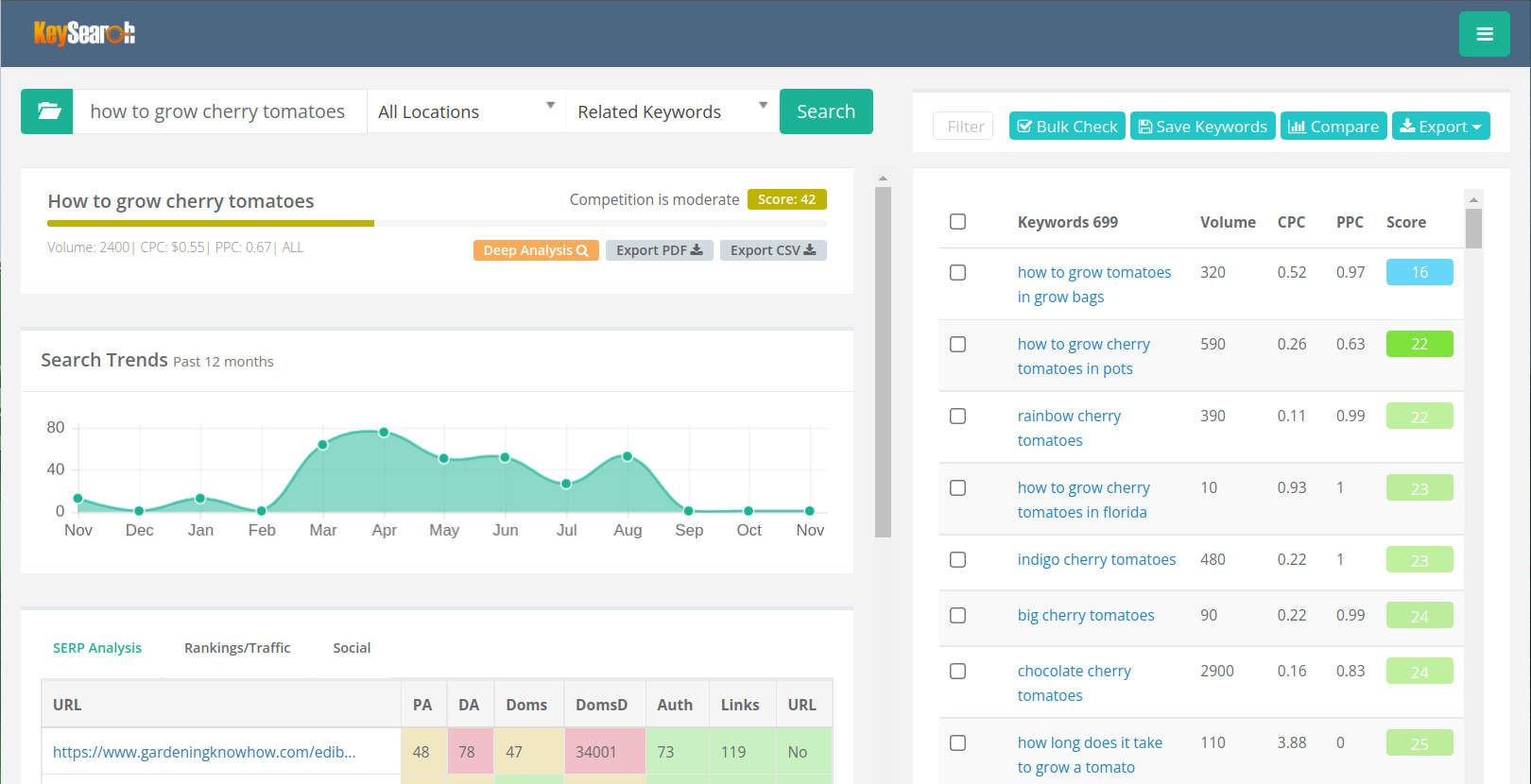

My idea was to create a template for a blog post that targets low-volume search queries shared across most homegrown produce. I used Keysearch to figure out which searches were the easiest to target across the board, and structured my "blog post" around them.

I designed a template to answer common questions such as "how to grow [insert vegetable here] in pots", or "how much sunlight does [insert vegetable here] need." With the template ready to go, it was time to generate some content.

A short detour

Like most CMSs, the static site generator I use expects content to be "fully baked" in the form of sentences and paragraphs. It has a template system for putting the look and feel together, but this system is not well suited for taking lots of tiny data points and stringing them together into readable content.

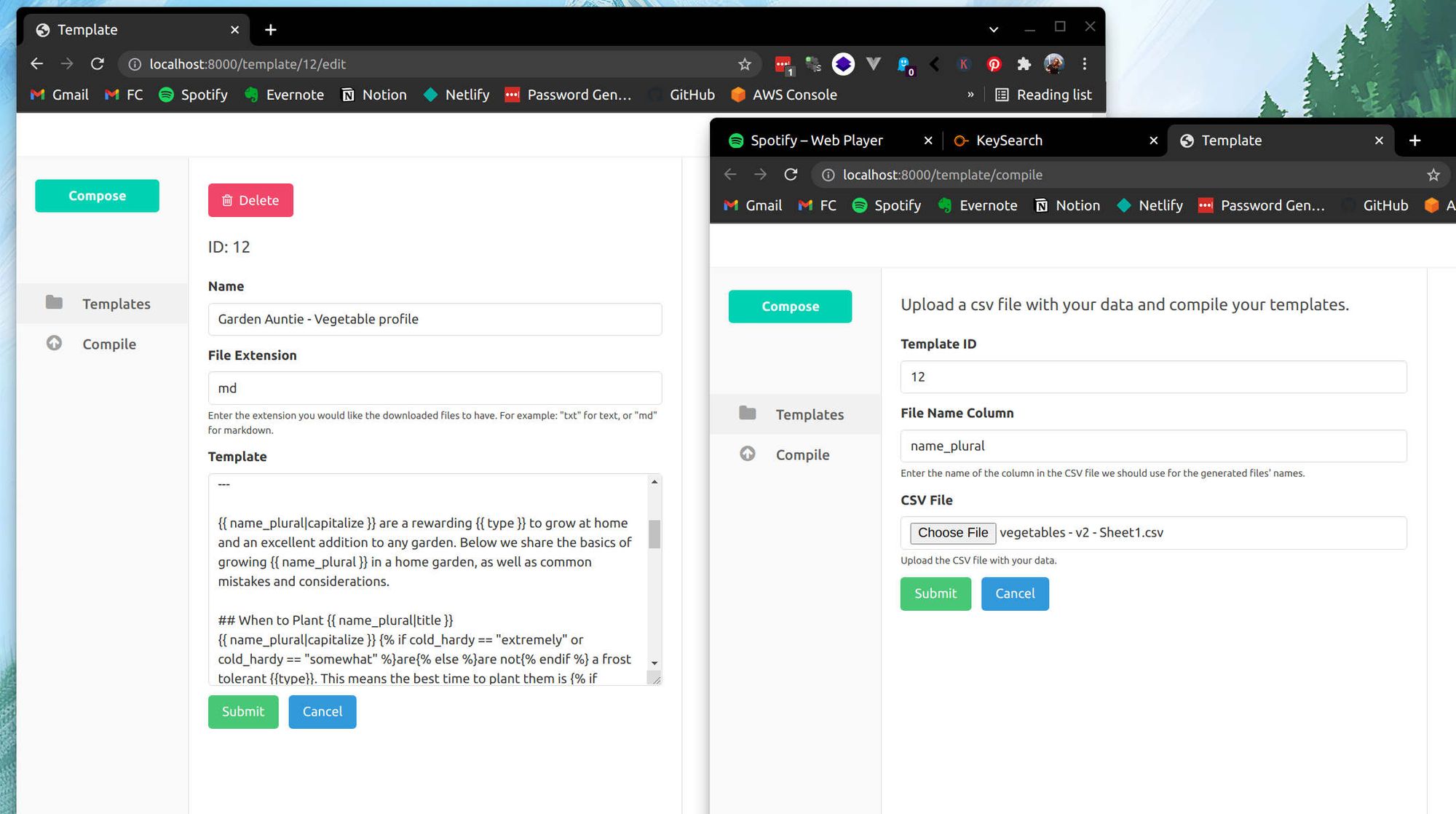

I thought I could build a different system better suited to my needs. And after a few hours of hacking, I had a simple templating app.

In the app, I can create a template, save it, then upload a spreadsheet of data to it. When data is fed to a template, a file is generated for each row in the spreadsheet.
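The one-file-per-row idea can be sketched in a few lines of Python. This is a minimal stand-in, not the app's actual code: the column names, sample values, and helper names are all made up, and plain `str.format` placeholders stand in for the real template language.

```python
import csv
import io
from pathlib import Path

# Stand-in for an uploaded spreadsheet; columns and values are invented.
SPREADSHEET = """name,sun_hours
cherry tomatoes,8
radishes,4
"""

# Plain str.format placeholders stand in for a real template language here.
TEMPLATE = "# How to grow {name}\n\n{name} need about {sun_hours} hours of sun a day.\n"


def slugify(name: str) -> str:
    """Turn 'cherry tomatoes' into 'cherry-tomatoes' for use as a file name."""
    return "-".join(name.lower().split())


def generate_pages(spreadsheet_text: str, template: str, out_dir: Path) -> list[Path]:
    """Write one Markdown file per spreadsheet row and return the paths."""
    out_dir.mkdir(parents=True, exist_ok=True)
    written = []
    for row in csv.DictReader(io.StringIO(spreadsheet_text)):
        path = out_dir / f"{slugify(row['name'])}.md"
        path.write_text(template.format(**row), encoding="utf-8")
        written.append(path)
    return written


if __name__ == "__main__":
    for page in generate_pages(SPREADSHEET, TEMPLATE, Path("generated")):
        print(page)
```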

The app processes templates using Jinja2, a template language for Python. This allows me to implement extra functionality like conditional statements and filters, which helps create more variety between the generated pages.
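To make the conditionals-and-filters point concrete, here's a small sketch of how a Jinja2 template can vary its wording based on a row's data. The template text, field names, and values are hypothetical and not taken from the real Garden Auntie template.

```python
from jinja2 import Environment

# Hypothetical template text and field names, for illustration only.
# The `capitalize` and `int` filters and the if/else block are standard Jinja2.
TEMPLATE = (
    "{{ name | capitalize }} need about {{ sun_hours }} hours of sun a day. "
    "{% if (sun_hours | int) >= 6 %}"
    "Pick the sunniest spot you have."
    "{% else %}"
    "A partly shaded bed works fine."
    "{% endif %}"
)

template = Environment().from_string(TEMPLATE)


def render_row(row: dict) -> str:
    """Render one spreadsheet row (a dict of column name to value) as text."""
    return template.render(**row)


# Spreadsheet cells arrive as strings, hence the `int` filter in the template.
print(render_row({"name": "cherry tomatoes", "sun_hours": "8"}))
print(render_row({"name": "radishes", "sun_hours": "4"}))
```

The conditional swaps out a whole sentence rather than a single word, which is one way to get more variation between pages from the same data.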

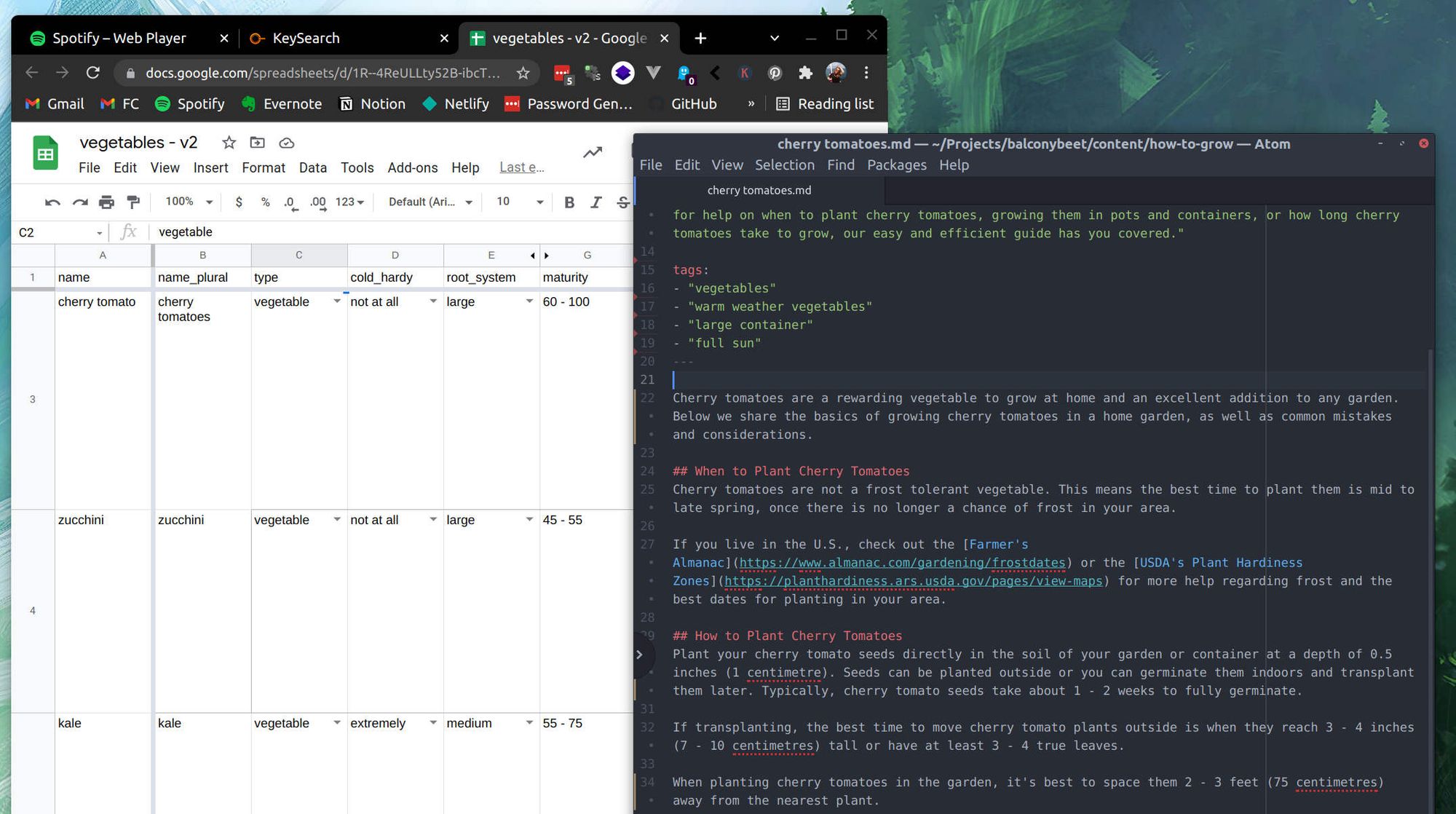

The image above shows my spreadsheet entry for "cherry tomatoes" and the final product created by the app: a Markdown file with a "blog post" about cherry tomatoes. I chose to make Markdown files from each spreadsheet row, since Markdown is what my CMS uses to handle content.

Want to try it yourself?

I've put the app I created for this experiment online so that others can try programmatic SEO as well! Check it out at PageFactory.app.

Putting it all together

I uploaded my spreadsheet of plant data to the app and created 27 pages of content in seconds. After downloading the files and uploading them to my site, Garden Auntie was ready to go!

What's Next for Programmatic SEO?

The next step in this project involves a lot of waiting. Hopefully Google will begin indexing my site, and in a few months, the pages will start appearing in search results. If not, I'll have to try tweaking some things.

I hope to add more content to the site in that time, but this brings up the first of a few glaring issues I've noticed with the experiment so far.

The sourcing problem

First, I didn't have a spreadsheet or database of information on common garden vegetables before I started this project. This means I've gathered all the data manually from the internet. Which, to be honest, is almost as much of a pain as writing.

And this has led to another issue. In order to streamline my data collection process, I need to collect less data.

Most potential sources for information have basic info like how much sunlight a plant needs or how far apart to space plants from each other. But more in-depth information, like optimum soil pH or how much produce a single plant can yield, would require a lot more research.

A small data set that isn't very unique creates a few issues. It limits the number of keywords I can target, and the keywords I can use are probably being targeted by other people.

What concerns me most, though, is that less data makes it difficult to create substantial differences between pages of content. Right now, it's very obvious where I switched out information in each "post". This creates minor differences between the pages, but is it enough that Google won't treat each page as a duplicate? I have no idea.
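One rough way to put a number on "how different are these pages" is a word-level similarity ratio from Python's standard `difflib` module. To be clear, this is only a sanity check under my own assumptions: it says nothing about how Google actually detects duplicate content, and the sample sentences are invented.

```python
from difflib import SequenceMatcher


def page_similarity(page_a: str, page_b: str) -> float:
    """Return a 0.0 to 1.0 word-level similarity ratio between two pages."""
    words_a = page_a.split()
    words_b = page_b.split()
    # autojunk=False keeps frequent words from being ignored on long pages.
    return SequenceMatcher(None, words_a, words_b, autojunk=False).ratio()


# Invented sample text: two "posts" that differ only in the plant name.
tomatoes = "Cherry tomatoes need 8 hours of sun and grow well in pots"
peppers = "Bell peppers need 8 hours of sun and grow well in pots"

print(f"Similarity: {page_similarity(tomatoes, peppers):.2f}")
```

A ratio close to 1.0 across most page pairs would suggest the template is doing nearly all the talking and the data very little.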

If I had more data, it would be easier to "customize" each page. Data collection was never a problem with my first project, Fantasy Congress. And it totally slipped my mind when thinking about a new content topic for this experiment.

If I try experimenting with another programmatic SEO website, figuring out where to source the data will be one of my first steps.

The Google problem

Of course, in order for my site to get indexed and rise in search rankings, I need to do all the traditional SEO things like get backlinks, submit a sitemap, optimize web performance, etc.

Most of these I've already taken care of. Garden Auntie pages are currently getting a green Lighthouse score close to 100.

But backlinks trouble me. I had the initial idea of looking up gardening forums and submitting the pages as answers to basic questions. Not sure if that will be enough though, or even work. I want to avoid coming off as spammy.

But, we'll see. I plan on waiting a while to ensure the pages are getting indexed first, before tackling bigger problems.

At the end of the day, this is an experiment. I'm not tied to a specific outcome, but to learning more about SEO and potential marketing strategies involving it. In 6 to 9 months, we'll check back in and see how it's progressed.